AI GTM Readiness & Compliance Framework

AI governance doesn’t end inside the model; it extends into how the product is positioned, sold, and understood.

I built this framework after watching teams rush AI features to market without the guardrails, alignment, or clarity needed to protect customers and the business.

This project reflects my belief that responsible AI is as much a go-to-market (GTM) function as a technical one. Before a model ever reaches a user, there should be a strategy for how it’s explained, tested, supported, and governed across product, engineering, marketing, sales, legal, and security.

This framework was developed to address a recurring failure pattern: AI features reaching market before governance, risk, and customer narratives were aligned.

This is my playbook for releasing AI features with confidence, transparency, and intention, whether you’re an SMB building your first LLM-powered workflow or an enterprise preparing a public launch.

-

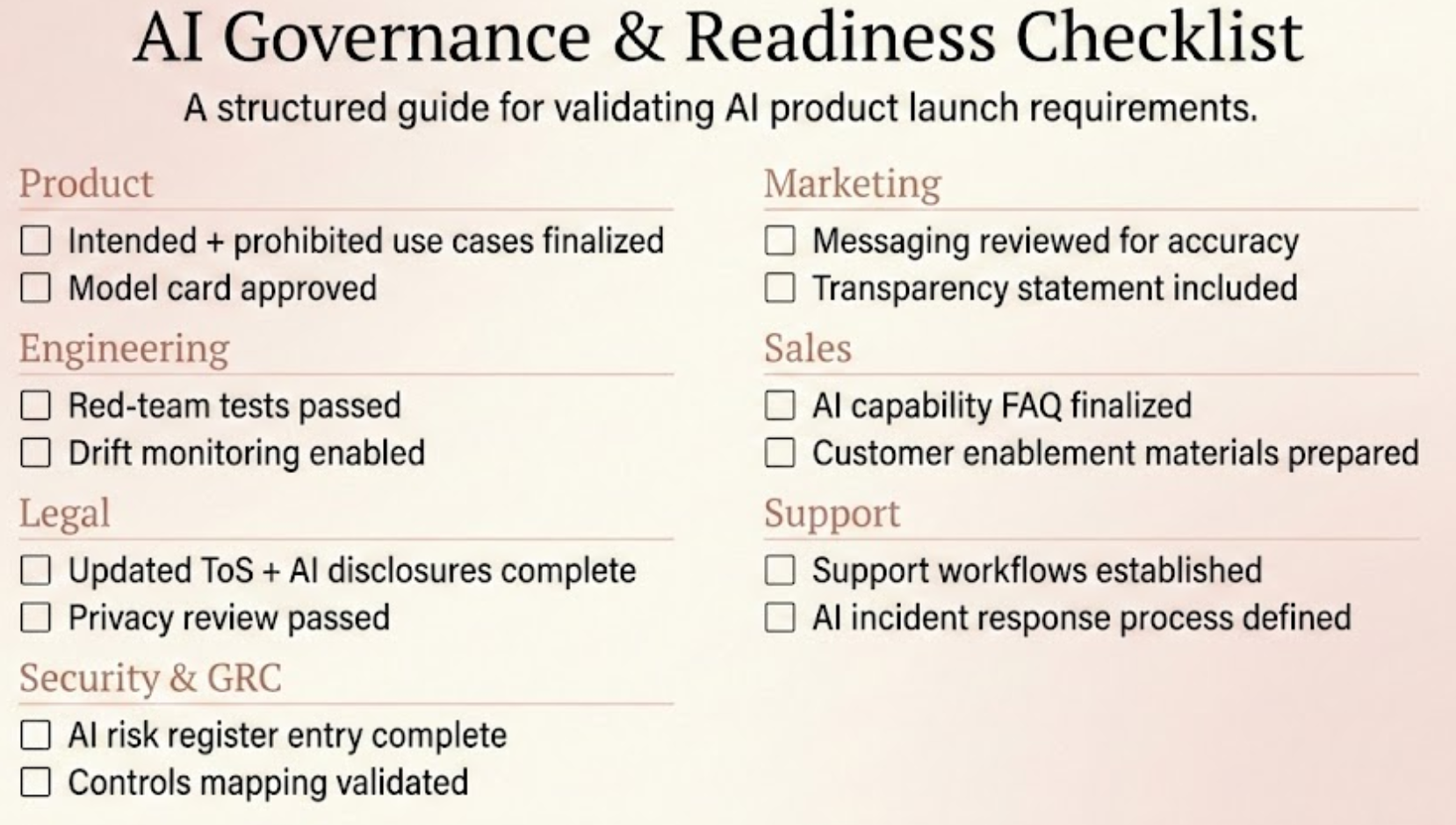

This framework provides a structured GTM readiness and compliance process for AI-powered features.

It ensures teams align before launch, risks are documented early, and customer-facing narratives match internal governance decisions.

It includes readiness criteria across:

Product

Engineering

Legal & Privacy

Security & GRC

Marketing

Sales Enablement

Support & Monitoring

-

This work is informed by my experience supporting sales and client negotiations directly, where security requirements often became a gating factor for revenue. By defining engagement tiers, decision thresholds, and performance metrics, the goal is to enable faster go-to-market motion while maintaining a defensible security and risk posture.

In practice, this lens reduces last-minute escalations, shortens security review cycles, and creates clearer yes/no decision paths for sales teams.
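As a rough illustration of what a "clearer yes/no decision path" could look like, here is a minimal Python sketch that routes a deal to an engagement tier. The framework does not define the tiers or thresholds, so the tier names, the `SELF_SERVE_MAX_USD` value, and the input fields are all hypothetical placeholders to be replaced with your own.

```python
from enum import Enum


class Tier(Enum):
    # Hypothetical tier names; the framework does not prescribe these.
    SELF_SERVE = "self-serve"            # sales answers from pre-approved material
    STANDARD_REVIEW = "standard review"  # security reviews a standard questionnaire
    FULL_REVIEW = "full review"          # security and legal review custom terms


# Placeholder threshold, chosen only for illustration.
SELF_SERVE_MAX_USD = 25_000


def engagement_tier(deal_value_usd: int, handles_pii: bool, custom_terms: bool) -> Tier:
    """Pick the lightest tier that still covers the deal's risk signals."""
    if custom_terms:
        return Tier.FULL_REVIEW
    if handles_pii or deal_value_usd > SELF_SERVE_MAX_USD:
        return Tier.STANDARD_REVIEW
    return Tier.SELF_SERVE


tier = engagement_tier(deal_value_usd=10_000, handles_pii=False, custom_terms=False)
print(tier.value)
```

The point of the sketch is that each rule is explicit and checked in order of severity, so a sales team can see why a deal landed in a given tier without escalating.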

-

This work is also informed by my experience supporting security and contract transitions during multiple business divestitures, where existing agreements, obligations, and review processes needed to be reassessed under tight timelines. In those environments, security decisions directly affected customer confidence, deal continuity, and revenue stability, reinforcing the need for governance models that scale during organizational change rather than break under pressure.

-

AI features often get pushed to market before the following are in place:

governance and GTM alignment

risk and messaging alignment

customer expectation mapping

compliance narratives

safety evaluations

lifecycle monitoring strategies

This creates:

Internal misalignment (governance, risk, lifecycle)

External misrepresentation (marketing, sales, customer expectations)

Operational fragility (support, monitoring, incident response)

Companies need a repeatable, cross-functional AI launch process that connects risk to product to GTM.

-

1. Product Readiness

Clear definition of AI functionality

Intended use vs prohibited use

UX expectations

Human-in-the-loop checkpoints

Failure mode identification

Model card availability

2. Engineering Readiness

Performance thresholds

Error handling

Red-team results reviewed

Logging & observability

Drift detection plan

3. Legal & Privacy Review

PII handling

Consent mechanisms

Data retention

Model transparency wording

Liability boundaries

Terms of use updates

4. Security & GRC

AI risk register

Compliance mapping

Deployment controls

Incident escalation flow

Model misuse detection

5. Marketing Alignment

Risk-aware messaging

No overpromising model capability

Ethical marketing patterns

Transparency statements

6. Sales Enablement

Honest capability breakdown

What the model cannot do

Customer FAQs

Use-case boundaries

Competitive positioning

7. Support & Monitoring

Clear paths for AI-related tickets

Escalation to engineering/legal

Customer-facing incident responses

Ongoing evaluation cadence
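The seven readiness areas above can be tracked as a simple launch gate. The following is a minimal Python sketch, not part of the original framework: the `ReadinessArea` class, the `launch_blockers` function, and the sample sign-off statuses are illustrative assumptions, and only three of the seven areas are shown for brevity.

```python
from dataclasses import dataclass, field


@dataclass
class ReadinessArea:
    """One functional area and the sign-off status of its checklist items."""
    name: str
    # Maps checklist item -> True once the owning team has signed it off.
    items: dict[str, bool] = field(default_factory=dict)

    def open_items(self) -> list[str]:
        """Checklist items that have not been signed off yet."""
        return [item for item, done in self.items.items() if not done]


def launch_blockers(areas: list[ReadinessArea]) -> dict[str, list[str]]:
    """Return open items per area; an empty dict means nothing blocks launch."""
    return {a.name: a.open_items() for a in areas if a.open_items()}


# Sample statuses are made up for illustration.
areas = [
    ReadinessArea("Product", {"Model card availability": True,
                              "Failure mode identification": False}),
    ReadinessArea("Engineering", {"Drift detection plan": True}),
    ReadinessArea("Support & Monitoring", {"Ongoing evaluation cadence": False}),
]

blockers = launch_blockers(areas)
for area, items in blockers.items():
    print(f"{area}: {', '.join(items)}")
```

Keeping the gate as plain data makes it easy to review in the cross-functional meeting: every open item has a named area, and launch proceeds only when `launch_blockers` returns an empty result.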

-

These recommendations are designed to be lightweight, repeatable, and compatible with existing enterprise processes.

Pre-Launch

Cross-functional review meeting

Final safety evaluation

Compliance documentation

Executive sign-off

Launch

GTM alignment

Messaging discipline

Sales + support readiness

Usage monitoring begins

Post-Launch

30/60/90-day AI performance review

Drift & failure mode analysis

Legal + risk reevaluation

Customer feedback loop
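The post-launch review cadence can be put on a calendar at launch time. This is a small Python sketch under stated assumptions: the `review_schedule` helper and the sample launch date are hypothetical, and the offsets are counted in calendar days from the launch date.

```python
from datetime import date, timedelta

# Review checkpoints, in calendar days after launch.
REVIEW_OFFSETS_DAYS = (30, 60, 90)


def review_schedule(launch_date: date) -> dict[int, date]:
    """Map each review offset to the date that review falls due."""
    return {days: launch_date + timedelta(days=days) for days in REVIEW_OFFSETS_DAYS}


# Hypothetical launch date, used only to demonstrate the helper.
schedule = review_schedule(date(2025, 1, 15))
for offset, due in schedule.items():
    print(f"{offset}-day review due {due.isoformat()}")
```

Scheduling all three reviews up front gives drift analysis, legal and risk reevaluation, and customer feedback a fixed slot rather than leaving them to be arranged after an incident.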

-

AI GTM Readiness Checklist (PDF)

Model Card Template

AI Risk Register

Controls Mapping

Transparency Statement Template

Sales Enablement One-Pager

Governance Review Workflow Diagram