Shadow AI Discovery and Governance Intake

I have spent years working at the intersection of security, contracts, and technology, often stepping in after decisions were already made and risk had already taken shape.

What I noticed was simple.

Most AI risk is not malicious.

It is forgotten.

Forgotten during fast vendor onboarding.

Forgotten when features quietly change.

Forgotten when responsibility lives in someone’s inbox instead of the organization.

My work focuses on designing governance systems that remember.

-

AI rarely enters organizations through formal approval channels.

More often, it appears through self-serve tools, vendor features enabled by default, or teams experimenting to move faster. By the time security or legal becomes aware, the technology is already embedded in workflows and contracts.

This project establishes a Shadow AI discovery and governance intake layer designed to bring visibility and ownership to AI usage early, while decisions are still flexible.

Not to police.

Not to shame.

But to create memory.

-

Most AI governance programs assume AI adoption is intentional and well documented.

In reality, it is not.

AI tools are adopted informally. Contracts include AI capabilities that go unnoticed. Vendors evolve their offerings faster than internal review cycles. Risk is rarely ignored on purpose. It is simply discovered too late.

Without a structured intake process, organizations are left with fragmented visibility, unclear ownership, and reactive decision-making.

-

This control treats Shadow AI as a governance signal, not a failure.

The goal is to surface AI usage early, capture context while decisions are still reversible, and route information to the right teams in a way that feels supportive rather than punitive.

The intake layer becomes the starting point for every downstream decision related to AI risk.

-

AI Usage Identified

↓

Governance Intake Triggered

↓

Context Captured

Data type, purpose, vendor, ownership

↓

Risk Signal Interpreted

↓

Low | Logged for awareness

Medium | Routed for security or legal review

High | Escalated for formal assessment

↓

Decision and Evidence Preserved
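
One way to picture the flow above is as a small triage function. The sketch below is a minimal illustration under stated assumptions, not the implementation behind this project: the `IntakeRecord` fields mirror the context listed in the flow, while the data categories in `SENSITIVE_DATA` and `INTERNAL_DATA` and the rules in `interpret_risk` are hypothetical placeholders that each organization would replace with its own taxonomy.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional, Set


class RiskLevel(Enum):
    LOW = "low"
    MEDIUM = "medium"
    HIGH = "high"


# Hypothetical data categories, used only for illustration.
SENSITIVE_DATA = {"customer_pii", "health", "financial", "source_code"}
INTERNAL_DATA = {"internal_docs", "employee_data"}

# Routing outcomes taken from the flow above.
ROUTES = {
    RiskLevel.LOW: "Logged for awareness",
    RiskLevel.MEDIUM: "Routed for security or legal review",
    RiskLevel.HIGH: "Escalated for formal assessment",
}


@dataclass
class IntakeRecord:
    """Context captured at intake: data type, purpose, vendor, ownership."""
    tool: str
    vendor: str
    purpose: str
    data_types: Set[str]
    owner: Optional[str]  # an owning team, not an individual's inbox


def interpret_risk(record: IntakeRecord) -> RiskLevel:
    """Assumed rules: sensitive data escalates; internal data or no owner gets review."""
    if record.data_types & SENSITIVE_DATA:
        return RiskLevel.HIGH
    if (record.data_types & INTERNAL_DATA) or record.owner is None:
        return RiskLevel.MEDIUM
    return RiskLevel.LOW


def route(record: IntakeRecord) -> str:
    """Map an intake record to the next governance step."""
    return ROUTES[interpret_risk(record)]
```

A missing owner is treated as a medium signal here on purpose: unclear ownership is itself something worth routing, even when the data involved is low risk.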

-

Undocumented AI usage across teams and vendors

Business purpose and operational context

Types of data involved

Ownership and accountability

Risk signals that require follow-up

All intake decisions are preserved so context does not disappear when people change roles or leave the organization.
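
Preserving decisions can be as simple as an append-only log that outlives any one person's inbox. The following is a minimal sketch, assuming a JSON Lines file as the evidence store; the function names, field names, and file layout are hypothetical, and a real deployment would more likely write to one of the registries or repositories the organization already uses.

```python
import json
from datetime import datetime, timezone
from pathlib import Path


def preserve_decision(log_path: Path, intake: dict, decision: str, owning_team: str) -> dict:
    """Append one intake decision to the log; existing entries are never rewritten."""
    entry = {
        "recorded_at": datetime.now(timezone.utc).isoformat(),
        "owning_team": owning_team,  # ownership sits with a team, not a person
        "decision": decision,
        "intake": intake,
    }
    with log_path.open("a", encoding="utf-8") as handle:
        handle.write(json.dumps(entry, sort_keys=True) + "\n")
    return entry


def load_decisions(log_path: Path) -> list:
    """Read every preserved decision back, oldest first."""
    if not log_path.exists():
        return []
    with log_path.open(encoding="utf-8") as handle:
        return [json.loads(line) for line in handle if line.strip()]
```

Because entries are only ever appended, the log keeps the original context of each decision even after the people involved change roles.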

-

Most Shadow AI solutions focus on detection alone.

This control focuses on what happens next.

It is designed to fit into real organizations where people move quickly and governance must keep pace without becoming a blocker.

Core principles:

Discovery is framed as intake, not enforcement

Ownership belongs to the organization, not individuals

Decisions are documented and retrievable

Intake feeds directly into vendor and contract controls

This is how governance becomes sustainable.

-

This control is intentionally lightweight and deployable using existing enterprise tools.

It integrates naturally with:

Intake registries such as Sheets, Airtable, or GRC platforms

Workflow tools like Jira, ServiceNow, or Slack

Evidence repositories including Drive or Confluence

The focus is adoption, not complexity.

-

This intake layer enables earlier visibility into AI usage, reduces downstream remediation, and improves coordination across security, legal, and risk teams.

More importantly, it shifts AI governance from reactive to intentional.

Decisions happen earlier.

Ownership is clear.

Context is preserved.

-

Shadow AI Discovery and Governance Intake is the first layer of a broader governance system.

From intake, AI risk flows into:

Vendor AI policy integrity monitoring

Contract intelligence and enforcement

Together, these controls ensure AI risk is visible, owned, and remembered.

-

Good governance does not begin with enforcement.

It begins with awareness, trust, and structure.

This project reflects how AI governance actually works inside real organizations and how it can be designed to scale without losing its human center.

Designed and implemented by Adarian Dewberry, focusing on governance systems that prioritize memory, ownership, and continuity.

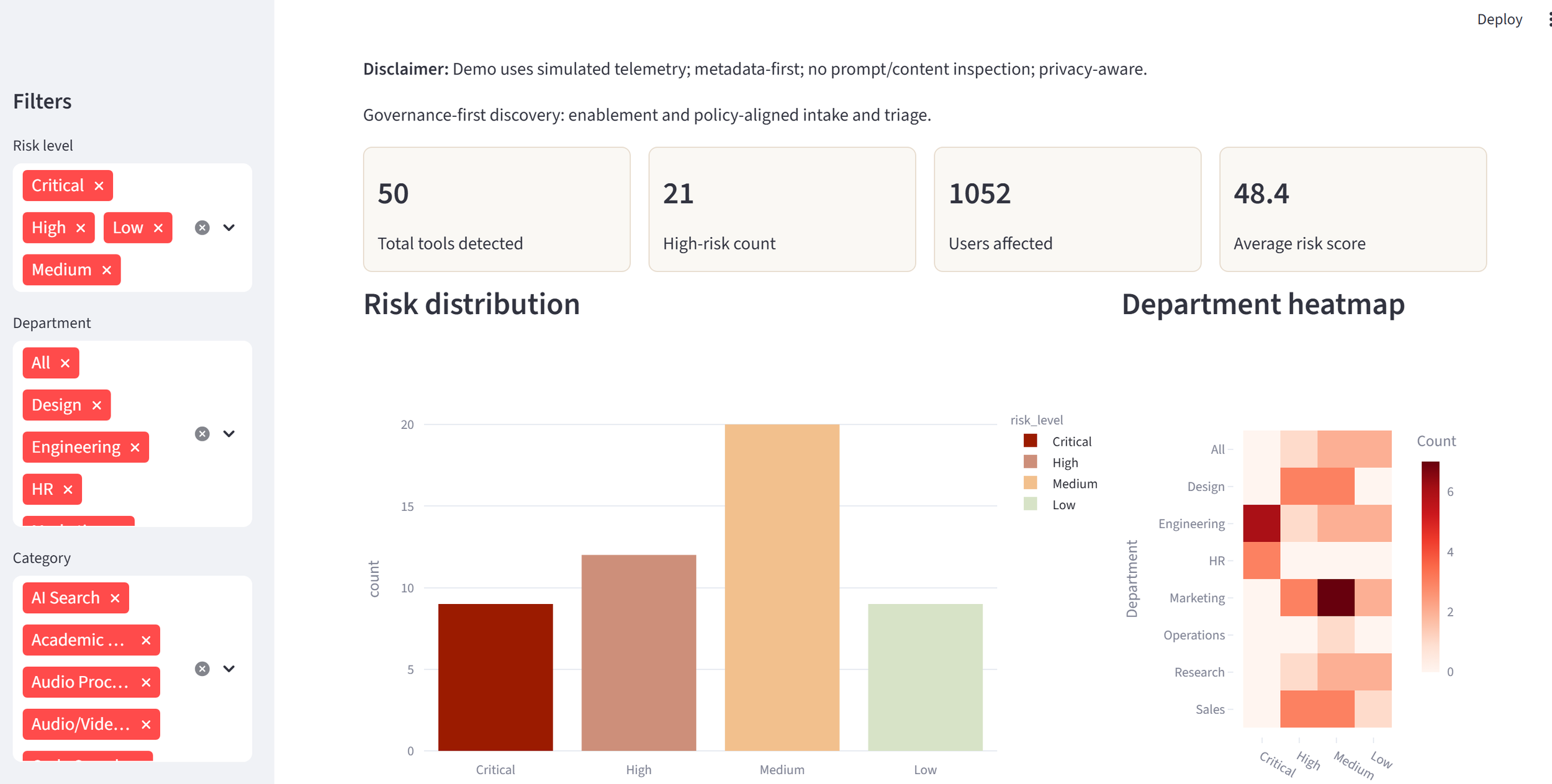

Governance Visibility and Intake Dashboard

To explore how this intake and visibility model could be implemented in practice, I built a lightweight, metadata-first Shadow AI discovery simulation.

The demo illustrates how organizations can identify AI usage patterns, classify risk signals, and support governance workflows without inspecting prompts or user content.
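
To make "metadata-first" concrete, here is a minimal sketch of what discovery without content inspection could look like. It is not the code behind the demo. It assumes log events that carry only a department, a destination domain, and a request count, and it matches them against a hypothetical catalog of AI tool domains (`AI_TOOL_CATALOG`, using placeholder `.example` domains).

```python
from collections import Counter

# Hypothetical catalog mapping destination domains to AI tool names.
AI_TOOL_CATALOG = {
    "chat.ai-vendor.example": "Example Chat Assistant",
    "api.summarizer.example": "Example Summarizer API",
}


def discover_ai_usage(events):
    """Count AI tool usage per (department, tool) from metadata only.

    Each event is a dict with "department", "domain", and an optional
    "requests" count. No prompt or user content is read or stored.
    """
    usage = Counter()
    for event in events:
        tool = AI_TOOL_CATALOG.get(event["domain"])
        if tool is not None:
            usage[(event["department"], tool)] += event.get("requests", 1)
    return usage


events = [
    {"department": "Marketing", "domain": "chat.ai-vendor.example", "requests": 12},
    {"department": "Marketing", "domain": "intranet.corp.example", "requests": 40},
    {"department": "Legal", "domain": "api.summarizer.example"},
    {"department": "Marketing", "domain": "chat.ai-vendor.example", "requests": 3},
]
usage = discover_ai_usage(events)
```

Each `(department, tool)` pair in the result is a candidate for governance intake, which keeps the signal at the level of teams and tools rather than individuals.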

The simulation is illustrative: it demonstrates governance workflow design, not production surveillance or a production deployment.